This snippet from Wikipedia is interesting: “Modern NLP algorithms are based on machine learning, especially statistical machine learning. The paradigm of machine learning is different from that of most prior attempts at language processing. Prior implementations of language-processing tasks typically involved the direct hand coding of large sets of rules. The machine-learning paradigm calls instead for using general learning algorithms - often, although not always, grounded in statistical inference - to automatically learn such rules through the analysis of large corpora of typical real-world examples. A corpus (plural, ‘corpora’) is a set of documents (or sometimes, individual sentences) that have been hand-annotated with the correct values to be learned.” The more you use NLP software of this kind, the more it knows and understands what to do.
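The contrast the Wikipedia passage draws, between hand-coded rules and rules learned from a hand-annotated corpus, can be illustrated with a very small sketch. The toy corpus, the tag names, and the function names `train` and `tag` are all invented for this example; it is a most-frequent-tag baseline, not how any particular product works.

```python
from collections import Counter, defaultdict

# A toy hand-annotated corpus: each sentence is a list of (word, tag) pairs.
# The sentences and tag names are made up for illustration only.
CORPUS = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("the", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("a", "DET"), ("dog", "NOUN"), ("sleeps", "VERB")],
    [("dogs", "NOUN"), ("chase", "VERB"), ("cats", "NOUN")],
]


def train(corpus):
    """Learn a word -> tag table from the annotations instead of hand-coding it."""
    counts = defaultdict(Counter)  # per-word tag counts
    all_tags = Counter()           # overall tag counts, used for unseen words
    for sentence in corpus:
        for word, tag_label in sentence:
            counts[word.lower()][tag_label] += 1
            all_tags[tag_label] += 1
    # Keep the most frequent tag seen for each word.
    model = {word: tags.most_common(1)[0][0] for word, tags in counts.items()}
    # Unseen words fall back to the most frequent tag in the whole corpus.
    fallback = all_tags.most_common(1)[0][0]
    return model, fallback


def tag(words, model, fallback):
    """Tag each word with its learned tag, or the fallback tag if unseen."""
    return [(w, model.get(w.lower(), fallback)) for w in words]


MODEL, FALLBACK = train(CORPUS)

if __name__ == "__main__":
    print(tag(["the", "cat", "barks"], MODEL, FALLBACK))
```

Nothing in `tag` encodes a grammar rule by hand: adding more annotated sentences to `CORPUS` changes the behaviour without touching the code, which is the point the quoted passage makes about learning from corpora.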

# Dragon NaturallySpeaking Home 13.0 English Software

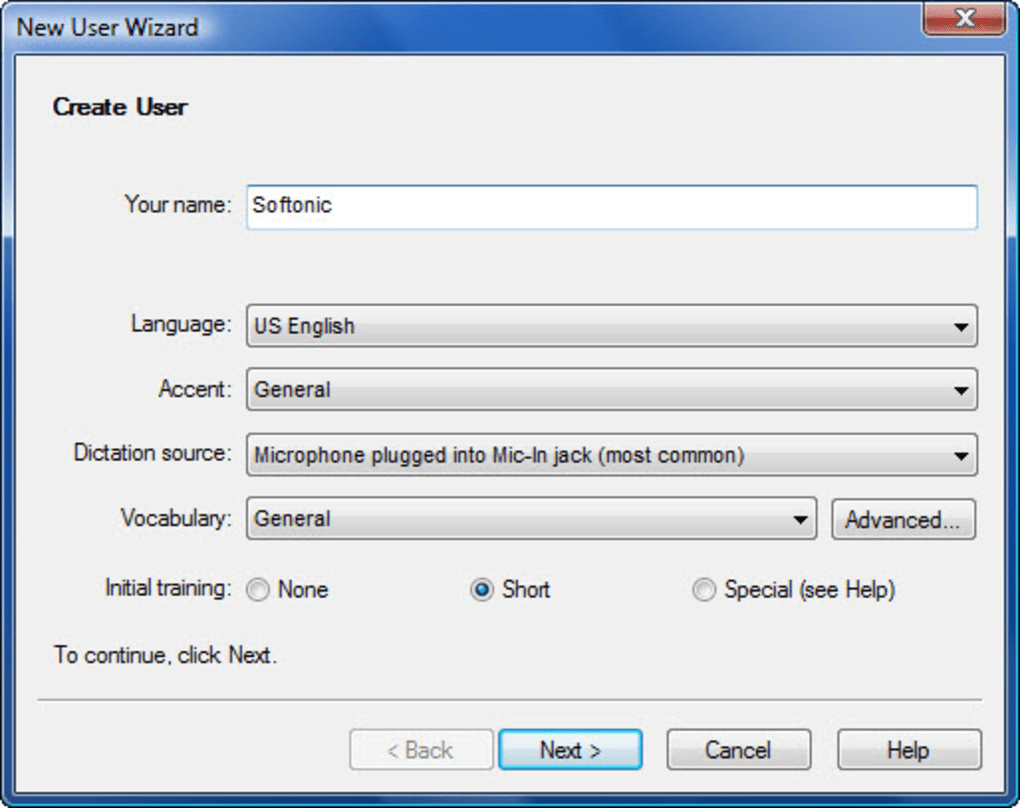

We’ve seen incredible progress in the field of Natural Language Processing (NLP). Dragon NaturallySpeaking is widely regarded as a frontrunner in the NLP market. Natural Language Processing is particularly relevant to human-robot interaction. The physics behind sound, and the ability to capture and reproduce sound accurately, is complex and fascinating. Differential equations are of particular importance when refining NLP processes. Machine learning is also of great importance in NLP. Dragon NaturallySpeaking is a great example of a machine learning tool: the software adapts to each individual user, and over time the automatic transcription becomes more accurate.
